The next area of iOS touchscreen event handling that we will look at in this book is the detection of gestures that involve movement. As covered in a previous chapter, a gesture refers to the activity that takes place between a finger touching the screen and the finger then being lifted from the screen. In the chapter entitled An Example iOS 17 Touch, Multitouch, and Tap App, we dealt with touches that did not involve movement across the screen surface. We will now create an example that tracks the coordinates of a finger as it moves across the screen.

This chapter assumes that the reader has already reviewed the An Overview of iOS 17 Multitouch, Taps, and Gestures chapter.

The Example iOS 17 Gesture App

This example app will detect when a single touch is made on the screen of the iPhone or iPad and then report the coordinates of that finger as it is moved across the screen surface.

Creating the Example Project

Begin by launching Xcode and creating a new project using the iOS App template with the Swift and Storyboard options selected, entering TouchMotion as the product name.

Designing the App User Interface

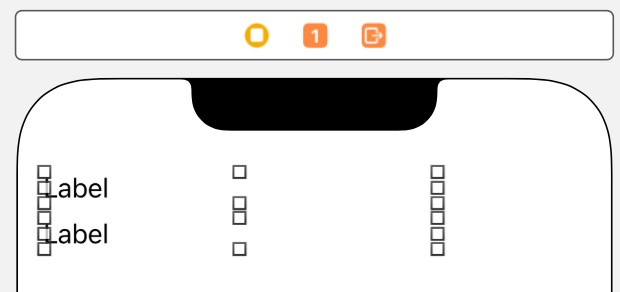

The app will display the X and Y coordinates of the touch and update these values in real-time as the finger moves across the screen. When the finger is lifted from the screen, the start and end coordinates of the gesture will then be displayed on two label objects in the user interface. Select the Main.storyboard file and, using Interface Builder, create a user interface that resembles the layout in Figure 54-1.

Be sure to stretch the labels so that they both extend to cover a little over half of the width of the view layout.

Select the top label object in the view canvas, display the Assistant Editor panel, and verify that the editor is displaying the contents of the ViewController.swift file. Ctrl-click on the same label object and drag the resulting line to a position just below the class declaration line in the Assistant Editor. Release the line, and in the resulting connection dialog, establish an outlet connection named xCoord. Repeat this step for the second label object to establish an outlet connection named yCoord.

Next, review the ViewController.swift file to verify that the outlets are correct, then declare a property in which to store the coordinates of the start location on the screen:

    import UIKit

    class ViewController: UIViewController {

        @IBOutlet weak var xCoord: UILabel!
        @IBOutlet weak var yCoord: UILabel!

        var startPoint: CGPoint!
    .
    .
    .
    }

Implementing the touchesBegan Method

When the user touches the screen, the location coordinates need to be saved in the startPoint instance variable, and those coordinates need to be reported to the user. This can be achieved by implementing the touchesBegan method in the ViewController.swift file as follows:

    override func touchesBegan(_ touches: Set<UITouch>, with event: UIEvent?) {
        if let theTouch = touches.first {
            // Save the initial touch location and report it to the user
            let point = theTouch.location(in: self.view)
            startPoint = point
            xCoord.text = "x = \(point.x)"
            yCoord.text = "y = \(point.y)"
        }
    }

Implementing the touchesMoved Method

When the user’s finger moves across the screen, the touchesMoved method will be called repeatedly until the motion stops. By implementing the touchesMoved method in our view controller, therefore, we can detect the motion and display the revised coordinates to the user:

    override func touchesMoved(_ touches: Set<UITouch>, with event: UIEvent?) {
        if let theTouch = touches.first {
            let touchLocation = theTouch.location(in: self.view)
            let x = touchLocation.x
            let y = touchLocation.y
            xCoord.text = "x = \(x)"
            yCoord.text = "y = \(y)"
        }
    }

Implementing the touchesEnded Method

When the user’s finger lifts from the screen, the touchesEnded method of the first responder is called. The final task, therefore, is to implement this method in our view controller such that it displays the end point of the gesture:

    override func touchesEnded(_ touches: Set<UITouch>, with event: UIEvent?) {
        if let theTouch = touches.first {
            let endPoint = theTouch.location(in: self.view)
            let x = endPoint.x
            let y = endPoint.y
            xCoord.text = "x = \(x)"
            yCoord.text = "y = \(y)"
        }
    }

Building and Running the Gesture Example

Build and run the app using the run button in the toolbar of the main Xcode project window. When the app starts (either in the iOS Simulator or on a physical device), touch the screen and drag to a new location before lifting your finger from the screen (or releasing the mouse button in the case of the iOS Simulator). During the motion, the current coordinates will update in real-time. Once the gesture is complete, the end location of the movement will be displayed.
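The startPoint property saved in touchesBegan is not read again by the touchesEnded method shown earlier. As one possible extension, the following minimal sketch shows how the start and end points of a gesture might be combined into a rounded summary. The GestureSummary type and its property names are hypothetical and are not part of the TouchMotion project; the code uses only Foundation so that it can be tried outside of an app:

```swift
import Foundation

// Hypothetical helper (not part of the chapter's project): summarizes a
// completed gesture from its start and end points.
struct GestureSummary {
    let start: CGPoint
    let end: CGPoint

    // Horizontal and vertical displacement, in points.
    var dx: Double { Double(end.x - start.x) }
    var dy: Double { Double(end.y - start.y) }

    // Straight-line distance between the start and end points.
    var distance: Double { (dx * dx + dy * dy).squareRoot() }

    // Text suitable for a label, rounded to one decimal place.
    var text: String {
        String(format: "moved %.1f pt (dx = %.1f, dy = %.1f)", distance, dx, dy)
    }
}

// Example values standing in for startPoint and the end location.
let summary = GestureSummary(start: CGPoint(x: 10, y: 20),
                             end: CGPoint(x: 13, y: 24))
print(summary.text)  // prints "moved 5.0 pt (dx = 3.0, dy = 4.0)"
```

In touchesEnded, a value of this kind could be built from startPoint and the end location and its text assigned to one of the labels, assuming startPoint has already been set by an earlier call to touchesBegan.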

Summary

By implementing the standard touch event methods, an iOS app can easily track the motion of a gesture. Much of a user’s interaction with apps, however, involves specific gesture types, such as swipes and pinches, and writing code to correlate finger movement on the screen with a specific gesture type would be extremely complex. Fortunately, iOS makes this task easy through the use of gesture recognizers. In the next chapter, entitled Identifying Gestures using iOS 17 Gesture Recognizers, we will look at this concept in more detail.
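To give a sense of what correlating movement with a gesture type by hand involves, the following is a deliberately naive sketch that classifies a finished gesture using only its start and end points. The SimpleGesture type, the classify function, and the 50-point threshold are all assumptions made for illustration. The sketch ignores timing, velocity, the path taken, and multiple touches, which is part of why a complete hand-written solution quickly becomes complex:

```swift
import Foundation

// Hypothetical, simplified set of gesture types for illustration only.
enum SimpleGesture: Equatable {
    case tap, swipeLeft, swipeRight, swipeUp, swipeDown
}

// `threshold` is an assumed minimum travel, in points, below which the
// movement is treated as a tap rather than a swipe.
func classify(from start: CGPoint, to end: CGPoint,
              threshold: Double = 50) -> SimpleGesture {
    let dx = Double(end.x - start.x)
    let dy = Double(end.y - start.y)

    // Too little movement on both axes: treat as a tap.
    if max(abs(dx), abs(dy)) < threshold { return .tap }

    // The dominant axis decides between horizontal and vertical swipes.
    if abs(dx) >= abs(dy) {
        return dx > 0 ? .swipeRight : .swipeLeft
    }
    // The y axis of a UIKit view grows downward, so a positive dy
    // corresponds to a downward swipe.
    return dy > 0 ? .swipeDown : .swipeUp
}

let gesture = classify(from: CGPoint(x: 0, y: 0), to: CGPoint(x: 120, y: 10))
print(gesture)  // prints "swipeRight"
```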