Having covered the theory of gesture recognition on iOS in the chapter entitled Identifying Gestures using iOS 17 Gesture Recognizers, this chapter will work through an example application intended to demonstrate the use of the various UIGestureRecognizer subclasses.

The application created in this chapter will configure recognizers to detect a number of different gestures on the iPhone or iPad display and update a status label with information about each recognized gesture.

Creating the Gesture Recognition Project

Begin by invoking Xcode and creating a new iOS App project named Recognizer using Swift as the programming language.

Designing the User Interface

The only visual component on our UIView object will be the label used to notify the user of the type of gesture detected. Since the text displayed on this label will need to be updated from within the application code, it will need to be connected to an outlet. The view controller will also contain five gesture recognizer objects to detect pinches, taps, rotations, swipes, and long presses. When triggered, these objects will need to call action methods that update the label to tell the user which gesture has been detected.

Select the Main.storyboard file and drag a Label object from the Library panel to the center of the view. Once positioned, display the Resolve Auto Layout Issues menu and select the Reset to Suggested Constraints menu option listed in the All Views in View Controller section of the menu.

Select the label object in the view canvas, display the Assistant Editor panel, and verify that the editor is displaying the contents of the ViewController.swift file. Ctrl-click on the same label object and drag to a position just below the class declaration line in the Assistant Editor. Release the line and, in the resulting connection dialog, establish an outlet connection named statusLabel.
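As a rough guide to what the outlet connection produces, ViewController.swift should now look something like the sketch below. The exact template code generated by Xcode may differ slightly between versions, so treat this as an illustration rather than the definitive file contents:

```swift
import UIKit

class ViewController: UIViewController {

    // Outlet created by the Ctrl-click and drag operation. The reference is
    // populated when the storyboard scene is loaded.
    @IBOutlet weak var statusLabel: UILabel!

    override func viewDidLoad() {
        super.viewDidLoad()
    }
}
```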

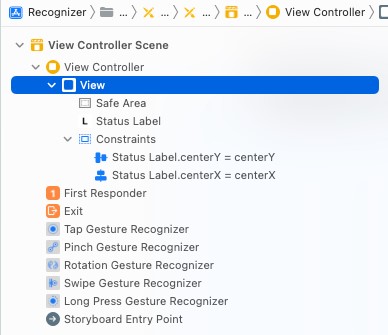

Next, the non-visual gesture recognizer objects need to be added to the design. Scroll down the list of objects in the Library panel until the Tap Gesture Recognizer object comes into view. Drag and drop the object onto the View in the design area (if the object is dropped outside the view, the connection between the recognizer and the view on which the gestures are going to be performed will not be established). Repeat these steps to add Pinch, Rotation, Swipe and Long Press Gesture Recognizer objects to the design. Note that the document outline panel (which can be displayed by clicking on the panel button in the lower left-hand corner of the storyboard panel) has updated to reflect the presence of the gesture recognizer objects, as illustrated in Figure 56-1. An icon for each recognizer added to the view also appears within the toolbar across the top of the storyboard scene.

Select the Tap Gesture Recognizer instance within the document outline panel and display the Attributes Inspector. Within the attributes panel, change the Taps value to 2 so that only double taps are detected.

Similarly, select the Long Press Recognizer object and change the Min Duration attribute to 3 seconds.
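The same two settings can also be applied in code instead of the Attributes Inspector. The following is a minimal sketch that assumes the recognizers are created programmatically instead of being added to the storyboard. The class name ProgrammaticRecognizerViewController is hypothetical and is not part of the Recognizer project. The numberOfTapsRequired and minimumPressDuration properties correspond to the Taps and Min Duration attributes:

```swift
import UIKit

// Hypothetical view controller used only to illustrate programmatic setup.
final class ProgrammaticRecognizerViewController: UIViewController {

    override func viewDidLoad() {
        super.viewDidLoad()

        // Equivalent of setting Taps to 2 in the Attributes Inspector.
        let doubleTap = UITapGestureRecognizer(
            target: self, action: #selector(tapDetected(_:)))
        doubleTap.numberOfTapsRequired = 2
        view.addGestureRecognizer(doubleTap)

        // Equivalent of setting Min Duration to 3 seconds.
        let longPress = UILongPressGestureRecognizer(
            target: self, action: #selector(longPressDetected(_:)))
        longPress.minimumPressDuration = 3.0
        view.addGestureRecognizer(longPress)
    }

    // Action methods must be exposed to Objective-C to be used with #selector.
    @objc func tapDetected(_ sender: UITapGestureRecognizer) {
        print("Double Tap")
    }

    @objc func longPressDetected(_ sender: UILongPressGestureRecognizer) {
        print("Long Press")
    }
}
```

With this approach, the call to addGestureRecognizer takes the place of dropping the recognizer onto the view in the storyboard.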

Having added and configured the gesture recognizers, the next step is to connect each recognizer to its corresponding action method.

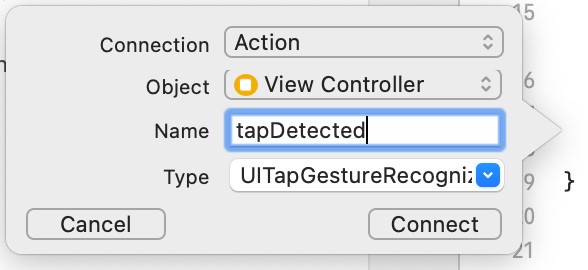

Display the Assistant Editor and verify that it is displaying the content of ViewController.swift. Ctrl-click on the Tap Gesture Recognizer object either in the document outline panel or in the scene toolbar and drag the line to the area immediately beneath the viewDidLoad method in the Assistant Editor panel. Release the line and, within the resulting connection dialog, establish an Action method configured to call a method named tapDetected with the Type value set to UITapGestureRecognizer as illustrated in Figure 56-2.

Repeat these steps to establish action connections for the pinch, rotation, swipe and long press gesture recognizers to methods named pinchDetected, rotationDetected, swipeDetected and longPressDetected respectively, taking care to select the corresponding type value for each action.
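Note that the swipe recognizer has been left at its default configuration, and a UISwipeGestureRecognizer detects swipes to the right unless its direction property is changed. As a sketch of how the swipeDetected method could report the direction from the recognizer instead of assuming it, the hypothetical helper below (not part of the original project) maps a direction value to a description:

```swift
import UIKit

// Hypothetical helper: converts a swipe direction into label text.
func swipeDescription(for direction: UISwipeGestureRecognizer.Direction) -> String {
    switch direction {
    case .left:
        return "Left swipe"
    case .right:
        return "Right swipe"
    case .up:
        return "Up swipe"
    case .down:
        return "Down swipe"
    default:
        // Direction is an option set, so combined values end up here.
        return "Swipe"
    }
}
```

Within swipeDetected, the helper might be used as statusLabel.text = swipeDescription(for: sender.direction).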

Implementing the Action Methods

Having configured the gesture recognizers, the next step is to add code to the action methods that will be called by each recognizer when the corresponding gesture is detected. The method stubs created by Xcode reside in the ViewController.swift file and need to be modified to update the status label with information about the detected gesture:

```swift
@IBAction func tapDetected(_ sender: UITapGestureRecognizer) {
    statusLabel.text = "Double Tap"
}

@IBAction func pinchDetected(_ sender: UIPinchGestureRecognizer) {
    let scale = sender.scale
    let velocity = sender.velocity
    let resultString =
        "Pinch - scale = \(scale), velocity = \(velocity)"
    statusLabel.text = resultString
}

@IBAction func rotationDetected(_ sender: UIRotationGestureRecognizer) {
    let radians = sender.rotation
    let velocity = sender.velocity
    let resultString =
        "Rotation - Radians = \(radians), velocity = \(velocity)"
    statusLabel.text = resultString
}

@IBAction func swipeDetected(_ sender: UISwipeGestureRecognizer) {
    statusLabel.text = "Right swipe"
}

@IBAction func longPressDetected(_ sender: UILongPressGestureRecognizer) {
    statusLabel.text = "Long Press"
}
```

Testing the Gesture Recognition Application

The final step is to build and run the application. Once the application loads on the device, perform the appropriate gestures on the display and watch the status label update accordingly. If using a simulator session, hold down the Option key while clicking with the mouse to simulate two touches for the pinch and rotation tests. Note that when testing on an iPhone, it may be necessary to rotate the device into landscape orientation to be able to see the full text displayed on the label.
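The pinch and rotation strings are long mainly because the scale and velocity values are shown at full precision. If the label text is truncated in portrait orientation, one option is to round the values before displaying them. The following is a minimal sketch using a hypothetical helper named pinchSummary, which is not part of the original project:

```swift
import Foundation

// Hypothetical helper: builds the pinch status text with both values
// rounded to two decimal places to keep the label short.
func pinchSummary(scale: Double, velocity: Double) -> String {
    return String(format: "Pinch - scale = %.2f, velocity = %.2f",
                  scale, velocity)
}
```

Within pinchDetected, the helper might be called as statusLabel.text = pinchSummary(scale: Double(sender.scale), velocity: Double(sender.velocity)), since the recognizer reports both values as CGFloat.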

Summary

The iOS SDK includes a set of gesture recognizer classes designed to detect swipe, tap, long press, pan, pinch, and rotation gestures. This chapter has worked through creating an example application that demonstrates how to implement gesture detection using these classes within the Interface Builder environment.