Most Android-based devices use a touch screen as the primary interface between the user and the device. The previous chapter introduced how a touch on the screen translates into an action within a running Android application. There is, however, much more to touch event handling than responding to a single finger tap on a view object. Most Android devices can, for example, detect more than one touch at a time. Nor are touches limited to a single point on the device display. Touches can be dynamic as the user slides one or more contact points across the screen’s surface.

An application can also interpret touches as a gesture. A horizontal swipe, for example, is typically used to turn the page of an eBook, while a pinching motion can zoom in and out of an image displayed on the screen.

This chapter will explain the handling of touches that involve motion and explore the concept of intercepting multiple concurrent touches. The topic of identifying distinct gestures will be covered in the next chapter.

Intercepting Touch Events

A view object can intercept touch events by registering an OnTouchListener event listener and implementing the corresponding onTouch() callback method. The following code, for example, ensures that any touches on a ConstraintLayout view instance named myLayout result in a call to the onTouch() method:

    binding.myLayout.setOnTouchListener(
        new ConstraintLayout.OnTouchListener() {
            public boolean onTouch(View v, MotionEvent m) {
                // Perform tasks here
                return true;
            }
        }
    );

As indicated in the code example, the onTouch() callback is required to return a Boolean value. Returning true tells the Android runtime that the listener has consumed the event, while returning false allows the event to be passed on for further handling (for example, by the view's own onTouchEvent() method). The method is passed both a reference to the view on which the event was triggered and an object of type MotionEvent.
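The effect of the return value can be illustrated with a small model written in plain Java. This is only a sketch of the general idea, not the framework's actual code: the TouchDispatchModel class, its TouchListener interface, and the string-based events are all hypothetical stand-ins invented for this illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified, hypothetical stand-in for a view's touch dispatch logic.
// None of these types are part of the Android SDK.
class TouchDispatchModel {

    /** Hypothetical counterpart of an OnTouchListener. */
    interface TouchListener {
        boolean onTouch(String event);
    }

    private final TouchListener listener;
    final List<String> log = new ArrayList<>();

    TouchDispatchModel(TouchListener listener) {
        this.listener = listener;
    }

    /** Offers the event to the listener first, then to the view's own handler. */
    boolean dispatch(String event) {
        if (listener != null && listener.onTouch(event)) {
            // The listener returned true, so the event stops here.
            log.add("listener consumed " + event);
            return true;
        }
        // The listener returned false, so the view's own handling runs.
        return onTouchEvent(event);
    }

    /** Stand-in for the view's built-in touch handling. */
    boolean onTouchEvent(String event) {
        log.add("view handled " + event);
        return true;
    }

    public static void main(String[] args) {
        TouchDispatchModel consuming = new TouchDispatchModel(e -> true);
        consuming.dispatch("DOWN");
        System.out.println(consuming.log);

        TouchDispatchModel passing = new TouchDispatchModel(e -> false);
        passing.dispatch("DOWN");
        System.out.println(passing.log);
    }
}
```

In this model, a listener that returns true prevents the view's own handler from seeing the event, which is why a listener that only wants to observe touches would typically return false.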

The MotionEvent Object

The MotionEvent object passed through to the onTouch() callback method is the key to obtaining information about the event. Information within the object includes the location of the touch within the view and the type of action performed. The MotionEvent object is also the key to handling multiple touches.

Understanding Touch Actions

An important aspect of touch event handling involves identifying the type of action the user performed. The type of action associated with an event can be obtained by calling the getActionMasked() method of the MotionEvent object passed through to the onTouch() callback method. When the first touch on a view occurs, the MotionEvent object will contain an action type of ACTION_DOWN together with the coordinates of the touch. When that touch is lifted from the screen, an ACTION_UP event is generated. Any motion of the touch between the ACTION_DOWN and ACTION_UP events will be represented by ACTION_MOVE events.

When more than one touch is performed simultaneously on a view, the touches are referred to as pointers. In a multi-touch scenario, the first pointer to make contact still generates ACTION_DOWN and the last pointer to be lifted still generates ACTION_UP, while any additional pointers begin and end with event actions of type ACTION_POINTER_DOWN and ACTION_POINTER_UP, respectively. To identify the index of the pointer that triggered the event, the getActionIndex() method of the MotionEvent object must be called.
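To make the relationship between the action type and the pointer index more concrete, the following plain Java sketch decodes a raw action value in the way getActionMasked() and getActionIndex() are documented to behave. The TouchActionDecoder class is a hypothetical helper written for this illustration; the constants are copied from the values documented for MotionEvent so that the example runs without the Android SDK.

```java
// Hypothetical helper; constants mirror those documented for android.view.MotionEvent.
class TouchActionDecoder {
    static final int ACTION_DOWN = 0;
    static final int ACTION_UP = 1;
    static final int ACTION_MOVE = 2;
    static final int ACTION_POINTER_DOWN = 5;
    static final int ACTION_POINTER_UP = 6;

    static final int ACTION_MASK = 0xff;
    static final int ACTION_POINTER_INDEX_MASK = 0xff00;
    static final int ACTION_POINTER_INDEX_SHIFT = 8;

    /** Extracts the action type from the low byte of the raw action value. */
    static int actionMasked(int rawAction) {
        return rawAction & ACTION_MASK;
    }

    /** Extracts the index of the pointer that triggered the event. */
    static int actionIndex(int rawAction) {
        return (rawAction & ACTION_POINTER_INDEX_MASK) >> ACTION_POINTER_INDEX_SHIFT;
    }

    /** Converts a raw action value into a readable description. */
    static String describe(int rawAction) {
        String name;
        switch (actionMasked(rawAction)) {
            case ACTION_DOWN:         name = "DOWN"; break;
            case ACTION_UP:           name = "UP"; break;
            case ACTION_MOVE:         name = "MOVE"; break;
            case ACTION_POINTER_DOWN: name = "PNTR DOWN"; break;
            case ACTION_POINTER_UP:   name = "PNTR UP"; break;
            default:                  name = "OTHER";
        }
        return name + " at index " + actionIndex(rawAction);
    }

    public static void main(String[] args) {
        // 0x0105 encodes ACTION_POINTER_DOWN (5) for the pointer at index 1.
        System.out.println(describe(0x0105));
        System.out.println(describe(ACTION_MOVE));
    }
}
```

In application code, there is no need to perform this masking by hand, since getActionMasked() and getActionIndex() return the two values directly.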

Handling Multiple Touches

The chapter entitled An Android Java Touch and Multi-touch Event Tutorial began exploring event handling within the narrow context of a single-touch event. In practice, most Android devices can respond to multiple simultaneous touches (though it is important to note that the number of simultaneous touches that can be detected varies depending on the device).

As previously discussed, each touch in a multi-touch situation is considered by the Android framework to be a pointer. Each pointer, in turn, is referenced by an index value and assigned an ID. The current number of pointers can be obtained via a call to the getPointerCount() method of the current MotionEvent object. The ID for a pointer at a particular index in the list of current pointers may be obtained via a call to the MotionEvent getPointerId() method. For example, the following code excerpt obtains a count of pointers and the ID of the pointer at index 0:

    public boolean onTouch(View v, MotionEvent m) {
        int pointerCount = m.getPointerCount();
        int pointerId = m.getPointerId(0);
        return true;
    }

Note that the pointer count will always be greater than or equal to 1 when the onTouch() callback is triggered (since at least one touch must have occurred for the callback to be triggered).

A touch on a view, particularly one involving motion across the screen, will generate a stream of events before the point of contact with the screen is lifted. An application will likely need to track individual touches over multiple touch events. While the ID of a specific touch will not change from one event to the next, it is important to remember that its index value may change as other touches come and go. When working with a touch over multiple events, the ID value must be used as the touch reference to ensure the same touch is being tracked. When calling methods that require an index value, this should be obtained by converting the ID for a touch to the corresponding index value via a call to the findPointerIndex() method of the MotionEvent object.
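The difference between a pointer's ID and its index can be seen in the following plain Java sketch. The PointerListModel class is a hypothetical, simplified model of the list of active pointers; its method names are chosen to echo those of MotionEvent, but the ordering of pointers in a real event is decided by the framework and is only approximated here.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of the active pointer list; not part of the Android SDK.
class PointerListModel {
    // Position in this list plays the role of the pointer index.
    private final List<Integer> activeIds = new ArrayList<>();

    /** Records a new touch with the given ID. */
    void pointerDown(int id) {
        activeIds.add(id);
    }

    /** Removes the touch with the given ID, shifting later pointers down by one index. */
    void pointerUp(int id) {
        activeIds.remove(Integer.valueOf(id));
    }

    int getPointerCount() {
        return activeIds.size();
    }

    /** Returns the ID of the pointer at the given index. */
    int getPointerId(int index) {
        return activeIds.get(index);
    }

    /** Returns the current index for an ID, or -1 if that touch is no longer active. */
    int findPointerIndex(int id) {
        return activeIds.indexOf(id);
    }

    public static void main(String[] args) {
        PointerListModel pointers = new PointerListModel();
        pointers.pointerDown(0);
        pointers.pointerDown(1);
        System.out.println("Index of ID 1: " + pointers.findPointerIndex(1));

        // The first finger lifts; the second touch keeps its ID but moves to a new index.
        pointers.pointerUp(0);
        System.out.println("Index of ID 1: " + pointers.findPointerIndex(1));
    }
}
```

Because the index of the second touch changes when the first touch ends, code that tracks a touch across events would store the ID and look up the index on each event rather than storing the index itself.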

An Example Multi-Touch Application

The example application created in the remainder of this chapter will track up to two touch gestures as they move across a layout view. As the events for each touch are triggered, the coordinates, index, and ID for each touch will be displayed on the screen.

Select the New Project option from the welcome screen and, within the resulting new project dialog, choose the Empty Views Activity template before clicking on the Next button.

Enter MotionEvent into the Name field and specify com.ebookfrenzy.motionevent as the package name. Before clicking the Finish button, change the Minimum API level setting to API 26: Android 8.0 (Oreo) and the Language menu to Java. Adapt the project to use view binding as outlined in section Android View Binding in Java.

Designing the Activity User Interface

The user interface for the application’s sole activity is to consist of a ConstraintLayout view containing two TextView objects. Within the Project tool window, navigate to app -> res -> layout and double-click on the activity_main.xml layout resource file to load it into the Android Studio Layout Editor tool.

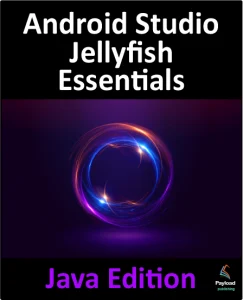

Select and delete the default “Hello World!” TextView widget and then, with autoconnect enabled, drag and drop a new TextView widget so that it is centered horizontally and positioned at the 16dp margin line on the top edge of the layout:

Drag a second TextView widget and position and constrain it so that a 32dp margin distances it from the bottom of the first widget:

Using the Attributes tool window, change the IDs for the TextView widgets to textView1 and textView2, respectively. Change the text displayed on the widgets to read “Touch One Status” and “Touch Two Status” and extract the strings to resources using the warning button in the top right-hand corner of the Layout Editor.

Implementing the Touch Event Listener

To receive touch event notifications, it will be necessary to register a touch listener on the layout view within the onCreate() method of the MainActivity activity class. Select the MainActivity.java tab from the Android Studio editor panel to display the source code. Within the onCreate() method, add code to register the touch listener. In this case, the onTouch() callback simply passes the MotionEvent object to a second method named handleTouch():

    package com.ebookfrenzy.motionevent;

    import androidx.appcompat.app.AppCompatActivity;
    import androidx.constraintlayout.widget.ConstraintLayout;

    import android.os.Bundle;
    import android.view.MotionEvent;
    import android.view.View;

    import com.ebookfrenzy.motionevent.databinding.ActivityMainBinding;

    public class MainActivity extends AppCompatActivity {

        private ActivityMainBinding binding;

        @Override
        protected void onCreate(Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            binding = ActivityMainBinding.inflate(getLayoutInflater());
            View view = binding.getRoot();
            setContentView(view);

            // Register the listener on the root ConstraintLayout
            binding.getRoot().setOnTouchListener(
                new ConstraintLayout.OnTouchListener() {
                    public boolean onTouch(View v, MotionEvent m) {
                        handleTouch(m);
                        return true;
                    }
                }
            );
        }

        // The handleTouch() method is added in the next step
    }

When we designed the user interface, the parent ConstraintLayout was not assigned an ID that would allow us to access it via the view binding mechanism. Since this layout component is the topmost component in the UI layout hierarchy, we have been able to reference it by calling the getRoot() method of the binding object in the code above.

Before testing the application, the final task is to implement the handleTouch() method called by the listener. The code for this method reads as follows:

    void handleTouch(MotionEvent m) {
        int pointerCount = m.getPointerCount();

        for (int i = 0; i < pointerCount; i++) {
            int x = (int) m.getX(i);
            int y = (int) m.getY(i);
            int id = m.getPointerId(i);
            int action = m.getActionMasked();
            int actionIndex = m.getActionIndex();
            String actionString;

            switch (action) {
                case MotionEvent.ACTION_DOWN:
                    actionString = "DOWN";
                    break;
                case MotionEvent.ACTION_UP:
                    actionString = "UP";
                    break;
                case MotionEvent.ACTION_POINTER_DOWN:
                    actionString = "PNTR DOWN";
                    break;
                case MotionEvent.ACTION_POINTER_UP:
                    actionString = "PNTR UP";
                    break;
                case MotionEvent.ACTION_MOVE:
                    actionString = "MOVE";
                    break;
                default:
                    actionString = "";
            }

            String touchStatus = "Action: " + actionString + " Index: "
                    + actionIndex + " ID: " + id + " X: " + x + " Y: " + y;

            if (id == 0)
                binding.textView1.setText(touchStatus);
            else
                binding.textView2.setText(touchStatus);
        }
    }

Before compiling and running the application, it is worth taking the time to walk through this code systematically to highlight the tasks performed.

The code begins by identifying how many pointers are currently active on the view:

    int pointerCount = m.getPointerCount();

Next, the pointerCount variable is used to control a for loop, which performs tasks for each active pointer. The first few lines of the loop obtain the X and Y coordinates of the touch together with the corresponding pointer ID, action type, and action index. Lastly, a string variable is declared:

    for (int i = 0; i < pointerCount; i++) {
        int x = (int) m.getX(i);
        int y = (int) m.getY(i);
        int id = m.getPointerId(i);
        int action = m.getActionMasked();
        int actionIndex = m.getActionIndex();
        String actionString;

Since action types equate to integer values, a switch statement is used to convert the action type to a more meaningful string value, which is stored in the previously declared actionString variable:

    switch (action) {
        case MotionEvent.ACTION_DOWN:
            actionString = "DOWN";
            break;
        case MotionEvent.ACTION_UP:
            actionString = "UP";
            break;
        case MotionEvent.ACTION_POINTER_DOWN:
            actionString = "PNTR DOWN";
            break;
        case MotionEvent.ACTION_POINTER_UP:
            actionString = "PNTR UP";
            break;
        case MotionEvent.ACTION_MOVE:
            actionString = "MOVE";
            break;
        default:
            actionString = "";
    }

Finally, the string message is constructed using the actionString value, the action index, touch ID, and X and Y coordinates. The ID value is then used to decide whether the string should be displayed on the first or second TextView object:

    String touchStatus = "Action: " + actionString + " Index: "
            + actionIndex + " ID: " + id + " X: " + x + " Y: " + y;

    if (id == 0)
        binding.textView1.setText(touchStatus);
    else
        binding.textView2.setText(touchStatus);

Running the Example Application

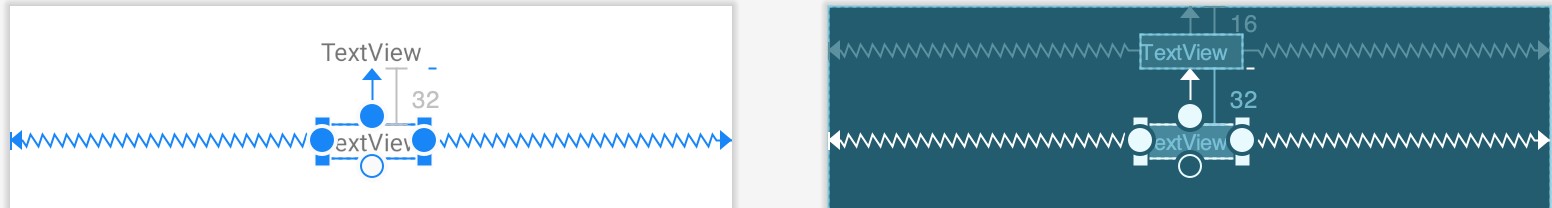

Compile and run the application and, once launched, experiment with single and multiple touches on the screen and note that the text views update to reflect the events as illustrated in Figure 27-3. When running on an emulator, multiple touches may be simulated by holding down the Ctrl (Cmd on macOS) key while clicking the mouse button (note that simulating multiple touches may not work if the emulator is running in a tool window):

Summary

Activities receive notifications of touch events by registering an OnTouchListener event listener on a view and implementing the onTouch() callback method, which, in turn, is passed a MotionEvent object when called by the Android runtime. This object contains information about the touch, such as the type of touch event, the coordinates of the touch, and a count of the number of touches currently in contact with the view.

When multiple touches are involved, each point of contact is referred to as a pointer, with each assigned an index and an ID. While the index of a touch can change from one event to another, the ID will remain unchanged until the touch ends.

This chapter has worked through creating an example Android application designed to display the coordinates and action type of up to two simultaneous touches on a device display.

Having covered touches in general, the next chapter (entitled “Detecting Common Gestures Using the Android Gesture Detector Class”) will look further at touchscreen event handling through gesture recognition.